HelloFresh is continuously refining the customer experience on the website and the mobile applications. We validate most of the changes we introduce through experimentation: changes are delivered to a portion of our customers, and are only rolled out to more of them if the feedback is positive. Creating and maintaining these experiments has long been a challenge in the company. Recently, I took part in a complete redesign of the experimentation infrastructure at HelloFresh. I'd like to share that story.

Before we continue, there is a bit of vocabulary that can be helpful to better understand the article:

- "A/B testing": Comparing two or more variations of a webpage (or pages) against each other and measuring how they perform against specifically defined metrics.

- "Audience": Group of visitors who share a characteristic, such as the way they came to your page or the browser they use. You target experiments based on audiences.

- "Activate": To record a user's impression of an experiment.

- "Experiment": An A/B, multivariate, or multi-page test. Also called a test or a campaign.

- "Variation": Alternate experience that is tested against the original (or baseline) in an A/B test.

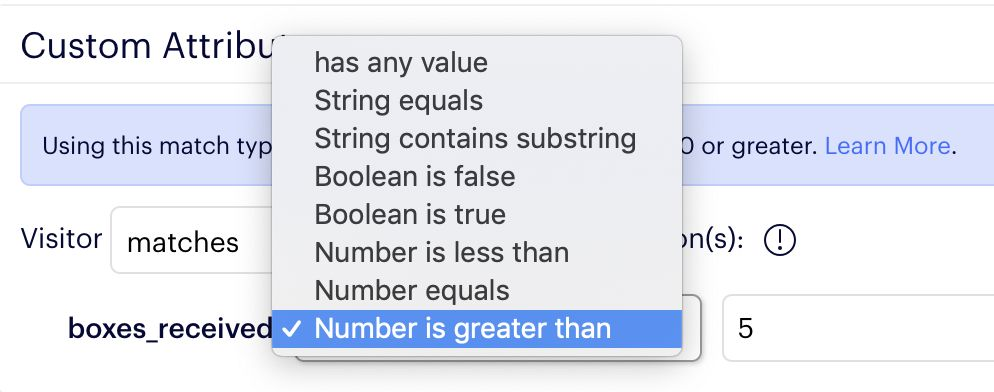

- "Customer attributes": Customer attributes are used to create audiences for the experiments. For example, the number of boxes received could be used to display guidance elements to new customers.

All good? Let's go.

A brief history

- Until 2018, there was no consensus regarding the experimentation framework or infrastructure. Teams were free to use whatever tools they judged best, and some created their own.

- In May 2018, after an initial testing phase, HelloFresh chose Optimizely as its sole experimentation framework going forward. Their SDK can run experiments without making blocking network requests to an outside API, ensuring microsecond latency.

- In July 2018, the integration with Optimizely became available within the API monolith. Teams started using it and immediately faced challenges.

- In September 2018, engineering leads agreed on building an "experimentation" service, intended to work on top of Optimizely to ensure the correct experimentation setup. A product owner and backend engineers were allocated.

- By January 2019, over 80 experiments had been conducted, but developers complained about the growing maintenance pain, and product owners complained about the time it took to create new experiments. Besides, the challenges reported at the beginning of the project still hadn't been addressed.

- In February 2019, senior management advanced the hypothesis that we had overcomplicated the way we set up experiments. A tiger team was assembled to objectively assess the ideal way to implement Optimizely, using the recommendations provided by their solution architects.

A new hope

We were a tiger team of five: the product manager and the lead developer of the experimentation team, and chapter leads: one for the web, one for the mobile apps, and me for the backend. We had a month to come up with a redesign and a working implementation for demonstration.

We started the project with a list of grievances:

- In many cases, the workflow of the experiments was broken and unreliable: users were counted as participating in an experiment even though they may never have been presented with it. Analysts had to clean up the metrics using other sources. The user journey was challenging to trace.

- Experiment definitions were spread over multiple services. Product owners required the assistance of developers to manage experiments.

- Experiments in backend services were complex and time-consuming to implement and maintain.

- Experiments caused a lot of flickering in the frontend, which made for a poor user experience.

- There was no way to preview experiment variations.

After collecting the findings from the previous design and assessing its shortcomings, we came up with a few goals:

- We should manage experiments in a single place.

- We should be able to retrieve customer attributes without involving the API monolith.

- We should be able to determine variations using Optimizely's SDK alone (zero-cost).

- We should be able to use Optimizely's SDK no matter the programming language.

Manage experiments in a single place

Adding an experiment was a lot of work. Not only did we have to create the experiment in Optimizely's admin, we also had to add an entry in our remote configuration service, and add code to support the experiment in the API monolith. A lot could go wrong.

Some features of the API monolith also existed in Optimizely's admin, such as enabling or disabling an experiment. Others, such as comparing attributes with "less than" or "greater than", weren't available. Luckily, we were in direct contact with Optimizely's solution architects, and they gave us access to a development version that drastically expanded the attribute comparison operators.

With that, the conditions were in place to remove all custom code and manage experiments from Optimizely's admin only.
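To make the role of comparison operators concrete, here is a minimal sketch of audience matching with "equal", "less than" and "greater than". The types, the operator names and the `newCustomers` audience are assumptions made for illustration; they are not Optimizely's audience schema.

```typescript
// Hypothetical types for illustration; not Optimizely's actual audience format.
type AttributeValue = string | number | boolean;
type Attributes = Record<string, AttributeValue>;
type Operator = "eq" | "lt" | "gt";

interface Condition {
  attribute: string;
  operator: Operator;
  value: AttributeValue;
}

// In this sketch an audience matches when every condition holds (a plain AND).
interface Audience {
  label: string;
  conditions: Condition[];
}

function matchesCondition(attributes: Attributes, condition: Condition): boolean {
  const actual = attributes[condition.attribute];
  const expected = condition.value;
  if (actual === undefined) {
    return false; // A missing attribute never matches.
  }
  if (condition.operator === "eq") {
    return actual === expected;
  }
  // Ordering comparisons are only defined for numbers in this sketch.
  if (typeof actual !== "number" || typeof expected !== "number") {
    return false;
  }
  return condition.operator === "lt" ? actual < expected : actual > expected;
}

function matchesAudience(attributes: Attributes, audience: Audience): boolean {
  return audience.conditions.every((condition) => matchesCondition(attributes, condition));
}

// Example audience: German customers who have received fewer than 3 boxes.
const newCustomers: Audience = {
  label: "new customers (DE)",
  conditions: [
    { attribute: "country", operator: "eq", value: "DE" },
    { attribute: "boxes_received", operator: "lt", value: 3 },
  ],
};
```

With operators like these available in the admin, a rule such as "fewer than 3 boxes received" no longer needs custom code in a service.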

Retrieving customer attributes without involving the API monolith

Customer attributes are used to create audiences for the experiments. For example, the number of boxes received could be used to display guidance elements to new customers. A lot of attributes were available in the API monolith, but after collecting them from the 80+ experiments, we found very few were actually used.

The following illustration demonstrates the actual usage of customer attributes:

We got rid of everything unused and came up with a curated list of attributes. The following JSON is an example:

    {
        "active_subscription_count": 1,
        "active_subscription_skus": "DE-***-**",
        "boxes_received": 101,
        "country": "DE",
        "created_timestamp": 1452598741,
        "created_week": "2016-W02",
        "first_subscription_timestamp": 1478600297,
        "first_subscription_week": "2016-W45",
        "user_id": "DE-2****4"
    }

I created a basic service to provide these attributes without involving the API monolith. The attributes can be cached for some time and be reused for any number of experiments. After the initial query, frontend applications would be able to determine variations at zero-cost, removing the unpleasant UI flickers.
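As an illustration of "cached for some time and reused for any number of experiments", here is a minimal sketch of a time-based cache in front of the attributes service. The `TtlCache` class, the one-minute lifetime and the `loadAttributes` function are assumptions; the article does not describe how the caching was actually implemented.

```typescript
// Subset of the curated attributes shown in the JSON example.
interface CustomerAttributes {
  user_id: string;
  country: string;
  boxes_received: number;
}

interface CacheEntry<V> {
  value: V;
  expiresAt: number;
}

// Hypothetical helper: keeps a value for `ttlMs` milliseconds, then reloads it.
class TtlCache<V> {
  private readonly entries = new Map<string, CacheEntry<V>>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  // The clock is injectable so that expiry can be exercised deterministically.
  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  getOrLoad(key: string, load: () => V): V {
    const currentTime = this.now();
    const cached = this.entries.get(key);
    if (cached !== undefined && cached.expiresAt > currentTime) {
      return cached.value; // Still fresh: the attributes service is not called.
    }
    const value = load();
    this.entries.set(key, { value, expiresAt: currentTime + this.ttlMs });
    return value;
  }
}

// Usage with a fake clock and a loader that counts calls to the "service".
let fakeTime = 0;
let serviceCalls = 0;
const loadAttributes = (): CustomerAttributes => {
  serviceCalls += 1;
  return { user_id: "DE-1", country: "DE", boxes_received: 101 };
};
const attributeCache = new TtlCache<CustomerAttributes>(60 * 1000, () => fakeTime);

attributeCache.getOrLoad("DE-1", loadAttributes); // miss: loads from the service
attributeCache.getOrLoad("DE-1", loadAttributes); // hit: served from the cache
fakeTime = 60 * 1000 + 1;
attributeCache.getOrLoad("DE-1", loadAttributes); // expired: loads again
```

In a real client the loader would return a Promise; storing that Promise as the cached value works the same way and has the side effect of sharing one in-flight request between concurrent callers.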

Using Optimizely's SDK no matter the programming language

The experimentation framework and the experiments lived in the API monolith. No other service had access to the Optimizely SDK, in some cases because an implementation was simply impossible: for example, their SDK was not available for Golang, which excluded most of our services. The challenge was to provide access to the SDK no matter the programming language and (again) remove the API monolith from the picture. Since the JavaScript SDK was the best available option, I created a Node.js service to wrap the SDK.
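The core of such a wrapper can be sketched as a function that takes a JSON request body and returns a variation, so that a service written in any language only needs to speak HTTP and JSON. Everything below is an assumption made for illustration: the wire format, the `handleVariationRequest` name and the `VariationResolver` interface, which stands in for the SDK client so the sketch has no dependency. The HTTP plumbing is left out.

```typescript
// Stand-in for the wrapped SDK client. The method shape is modelled on a
// "get variation" call returning a variation key or null; treat it as an
// assumption rather than the SDK's exact signature.
interface VariationResolver {
  getVariation(
    experimentKey: string,
    userId: string,
    attributes: Record<string, string | number | boolean>,
  ): string | null;
}

// Hypothetical wire format of the wrapper service.
interface VariationRequest {
  experimentKey: string;
  userId: string;
  attributes: Record<string, string | number | boolean>;
}

type VariationResponse =
  | { status: 200; body: { variation: string | null } }
  | { status: 400; body: { error: string } };

// Checks the overall shape only; attribute values are not validated here.
function isVariationRequest(value: unknown): value is VariationRequest {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const candidate = value as Record<string, unknown>;
  const attributes = candidate["attributes"];
  return (
    typeof candidate["experimentKey"] === "string" &&
    typeof candidate["userId"] === "string" &&
    typeof attributes === "object" &&
    attributes !== null &&
    !Array.isArray(attributes)
  );
}

function handleVariationRequest(resolver: VariationResolver, rawBody: string): VariationResponse {
  let parsed: unknown;
  try {
    parsed = JSON.parse(rawBody);
  } catch {
    return { status: 400, body: { error: "body is not valid JSON" } };
  }
  if (!isVariationRequest(parsed)) {
    return { status: 400, body: { error: "expected experimentKey, userId and attributes" } };
  }
  const variation = resolver.getVariation(parsed.experimentKey, parsed.userId, parsed.attributes);
  return { status: 200, body: { variation } };
}
```

Keeping the handler independent of the HTTP framework means the same function can be exercised with a stub resolver, without a network or a datafile.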

The following diagram represents one of the complex interactions we had at the time. Menu experiments were configured in a ServiceA and had to be retrieved in order for the API to figure out the experiment and the variation, before it could call ServiceA again to get the variation menu. And because ServiceA needed to know the variation, a shared database was used as an exchange.

With the redesign, ServiceA has access to Optimizely and can determine variations without leaking implementation details outside the service:

Comparing designs

Comparing experimentation flow

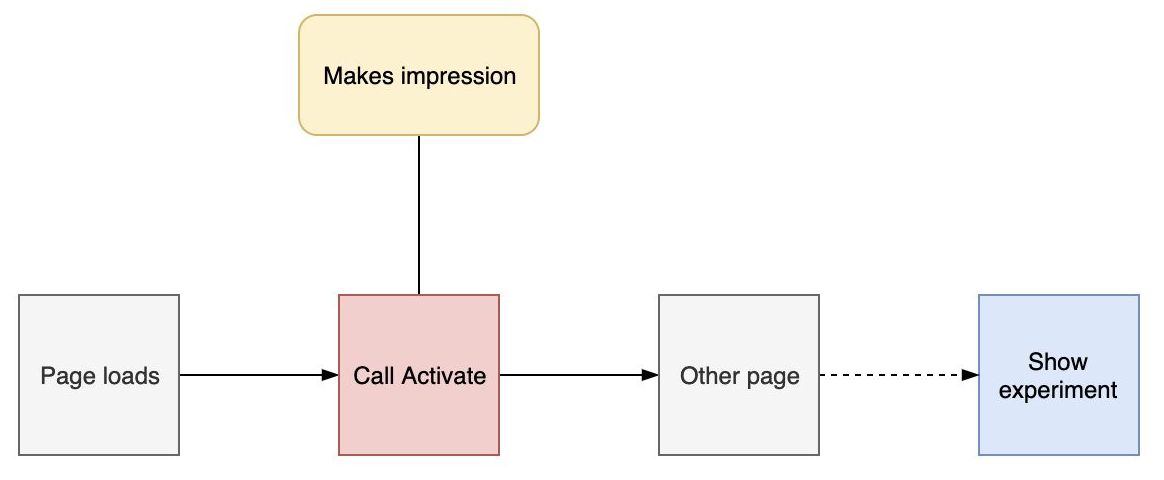

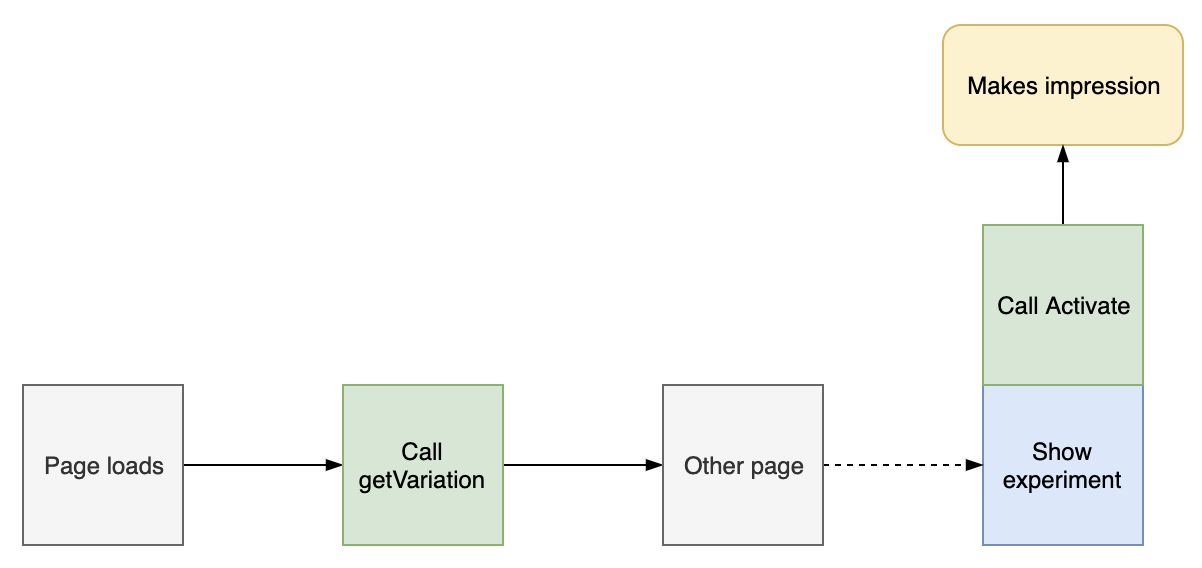

In the previous flow, we activated users when they entered the flow, regardless of whether they were exposed to the experiment. This limited the traffic available for other experiments (you don't want a user to be subjected to multiple experiments), and also created a massive discrepancy in the data, forcing us to clean it up using other data sources.

With the new design we're able to determine a variation without making any impression that would skew the data. Now, we only activate a user when the experiment is presented. All of this happens with zero latency thanks to Optimizely's SDK.
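The difference between determining a variation and activating a user can be sketched as follows. The `ExperimentClient` interface, the `RecordingClient` fake, its toy assignment rule and the `menu_layout` experiment key are assumptions made for illustration; only the principle (no impression until the experiment is presented) comes from the design described here.

```typescript
// Stand-in client: `getVariation` only computes the assignment, while
// `activate` also records an impression. Hypothetical, for illustration.
interface ExperimentClient {
  getVariation(experimentKey: string, userId: string): string | null;
  activate(experimentKey: string, userId: string): string | null;
}

class RecordingClient implements ExperimentClient {
  readonly impressions: string[] = [];

  getVariation(experimentKey: string, userId: string): string | null {
    // Toy deterministic rule; a real client would bucket the user properly.
    return (experimentKey.length + userId.length) % 2 === 0 ? "control" : "treatment";
  }

  activate(experimentKey: string, userId: string): string | null {
    const variation = this.getVariation(experimentKey, userId);
    if (variation !== null) {
      this.impressions.push(`${experimentKey}:${userId}`);
    }
    return variation;
  }
}

const EXPERIMENT_KEY = "menu_layout"; // hypothetical experiment key

interface Visit {
  plannedVariation: string | null;
  shownVariation: string | null;
}

function visitFlow(client: ExperimentClient, userId: string, reachesExperimentPage: boolean): Visit {
  // Knowing the variation early (for example to prepare data) records nothing.
  const plannedVariation = client.getVariation(EXPERIMENT_KEY, userId);
  if (!reachesExperimentPage) {
    return { plannedVariation, shownVariation: null };
  }
  // The impression is recorded only at the moment the variation is presented.
  const shownVariation = client.activate(EXPERIMENT_KEY, userId);
  return { plannedVariation, shownVariation };
}
```

A user who leaves before the experiment page produces no impression, so they stay out of the experiment's metrics and remain available for other experiments.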

Comparing architectures

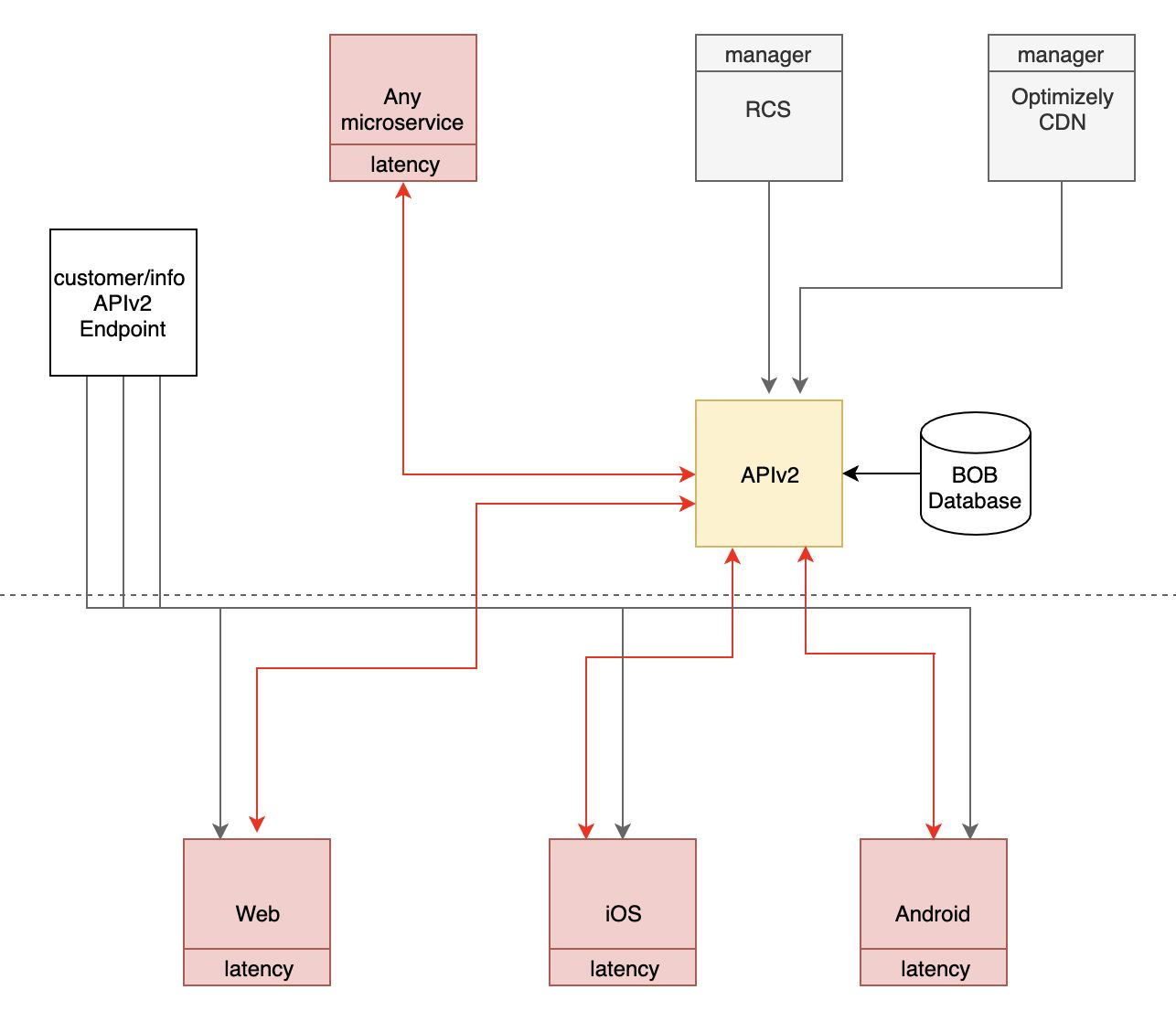

HelloFresh elected Optimizely because the SDK can run experiments without making blocking network requests to an outside API, ensuring microsecond latency. The SDK works with a JSON representation that contains all the data needed to deliver and track your features and experiments. That is what they call the datafile.
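To show why a locally held datafile removes the need for a network call, here is a minimal sketch of deterministic bucketing. The datafile shape is heavily simplified and hypothetical, the bucket count is an assumption, and FNV-1a is used as a stand-in hash; none of this should be read as the SDK's actual algorithm.

```typescript
// Hypothetical, heavily simplified datafile; the real one contains far more.
interface TrafficRange {
  variationKey: string;
  endOfRange: number; // exclusive upper bound of the bucket range
}

interface DatafileExperiment {
  key: string;
  trafficAllocation: TrafficRange[]; // sorted by ascending endOfRange
}

interface Datafile {
  experiments: DatafileExperiment[];
}

const BUCKET_COUNT = 10000; // assumption made for this sketch

// FNV-1a over UTF-16 code units, as a stand-in hash function.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

// The same user and experiment always land in the same bucket.
function bucketFor(userId: string, experimentKey: string): number {
  return fnv1a(`${experimentKey}:${userId}`) % BUCKET_COUNT;
}

function chooseVariation(datafile: Datafile, experimentKey: string, userId: string): string | null {
  const experiment = datafile.experiments.find((candidate) => candidate.key === experimentKey);
  if (experiment === undefined) {
    return null; // Unknown experiment.
  }
  const bucket = bucketFor(userId, experimentKey);
  for (const range of experiment.trafficAllocation) {
    if (bucket < range.endOfRange) {
      return range.variationKey;
    }
  }
  return null; // The bucket falls outside the allocated traffic.
}

// Example: a 50/50 split over all traffic.
const sampleDatafile: Datafile = {
  experiments: [
    {
      key: "menu_layout",
      trafficAllocation: [
        { variationKey: "control", endOfRange: 5000 },
        { variationKey: "treatment", endOfRange: 10000 },
      ],
    },
  ],
};
```

Because the assignment is a pure function of the datafile, the experiment key and the user ID, any client holding the same datafile reaches the same decision without asking a server.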

The previous implementation only lived inside the API monolith, probably because that was the only place where attributes required to determine the variation could be retrieved. Applications interested in determining a variation couldn't benefit from the zero-cost feature provided by the SDK and had to call the API, with added latency. In the backend, where network communication is fast, latency might not be so bad, but it is an entirely different story for frontend applications, especially mobile ones.

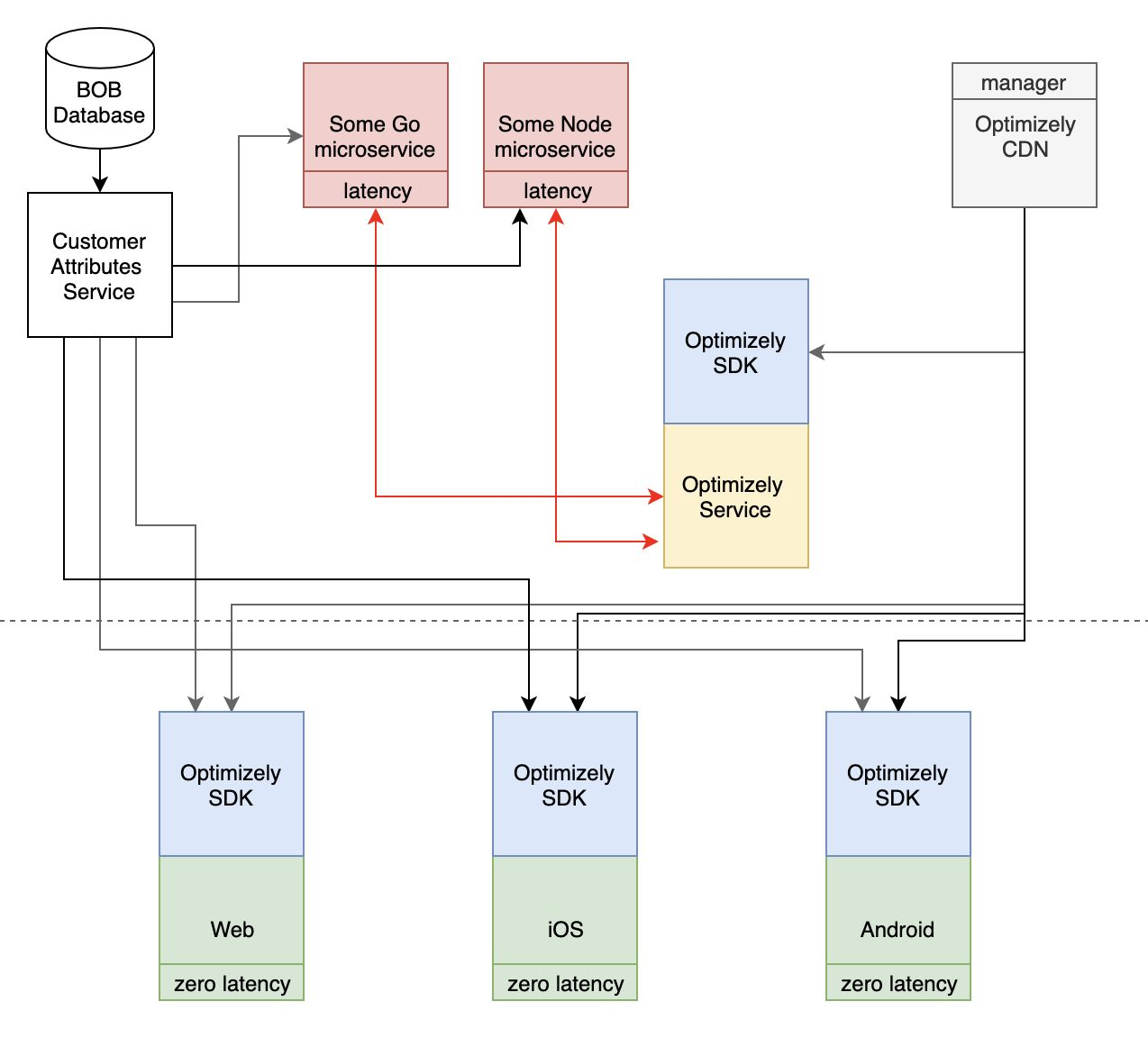

The following illustration highlights the latency issues on all applications:

With the new design, customer attributes can be retrieved from a fast dedicated service, without involving the API monolith, and Optimizely's SDK can be used no matter the programming language. Latency has been completely removed from the frontend applications. There is still some latency in the backend service, but that is something we can afford because the internal network is fast.

Conclusion

With a new service providing curated customer attributes and another to wrap Optimizely's SDK, we're now able to run experiments anywhere, without involving the API monolith. With Optimizely's zero-cost variation finally enabled, the UI no longer flickers. By making use of Optimizely features and new comparison operators, we removed extraneous code and configurations, and product owners are now able to manage experiments without involving developers. Finally, we now have accurate metrics, and we can better understand the user's journey, which should allow us to provide better experiences in the future.